How the Brain Processes Visual Information in Videos

Nowadays, video content dominates our screens, from social media reels to online courses and entertainment. But have you ever wondered how your brain processes all the visuals, motion, and sound in a video? Unlike static images, videos engage multiple parts of the brain simultaneously, creating an immersive experience that captivates audiences.

Understanding how the brain perceives and processes video information is crucial for content creators, video editors, and marketers. Additionally, advancements in video editing software, artificial intelligence (AI) video generators, and text-to-voice technology have revolutionized how videos are produced and consumed.

In this post, we’ll look at how the brain processes visual information in videos, how video editing can improve viewer engagement, and how AI tools like text-to-voice make content more accessible.

The Science Behind Visual Processing in Videos

How the Brain Perceives Motion and Frames

The human brain processes videos by rapidly interpreting a series of still images presented in quick succession. This illusion of apparent motion, often called the phi phenomenon (and closely related to beta movement), allows us to perceive motion even though videos are technically a sequence of static frames. The visual cortex, located in the occipital lobe at the back of the brain, plays a crucial role in interpreting movement and predicting future actions based on past frames.

Moreover, the middle temporal (MT) area of the brain, also known as V5, is specialized for detecting motion direction and speed. This helps explain why smooth transitions and high frame rates (such as 60 fps) make videos appear more fluid, while lower frame rates (such as 24 fps) may feel more cinematic but slightly choppier.
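To make the frame-rate comparison concrete, here is a minimal Python sketch that converts a frame rate into the time each frame stays on screen. The function name `frame_interval_ms` is our own choice for illustration, and the 30 fps row is added only for comparison; it does not appear in the discussion above.

```python
def frame_interval_ms(fps: float) -> float:
    """Return how long a single frame is displayed, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Compare the frame rates mentioned above (30 fps added for reference).
for fps in (24, 30, 60):
    print(f"{fps} fps -> {frame_interval_ms(fps):.1f} ms per frame")
```

At 24 fps each frame is held for roughly 41.7 ms, while at 60 fps it is held for roughly 16.7 ms, so the gap between successive images is much shorter at the higher rate.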

Color and Contrast in Visual Perception

Color is another critical aspect of how the brain processes video. The cones in our retinas detect different wavelengths of light, allowing us to perceive colors. The brain then processes these signals in the visual cortex, which helps us distinguish objects and understand depth.

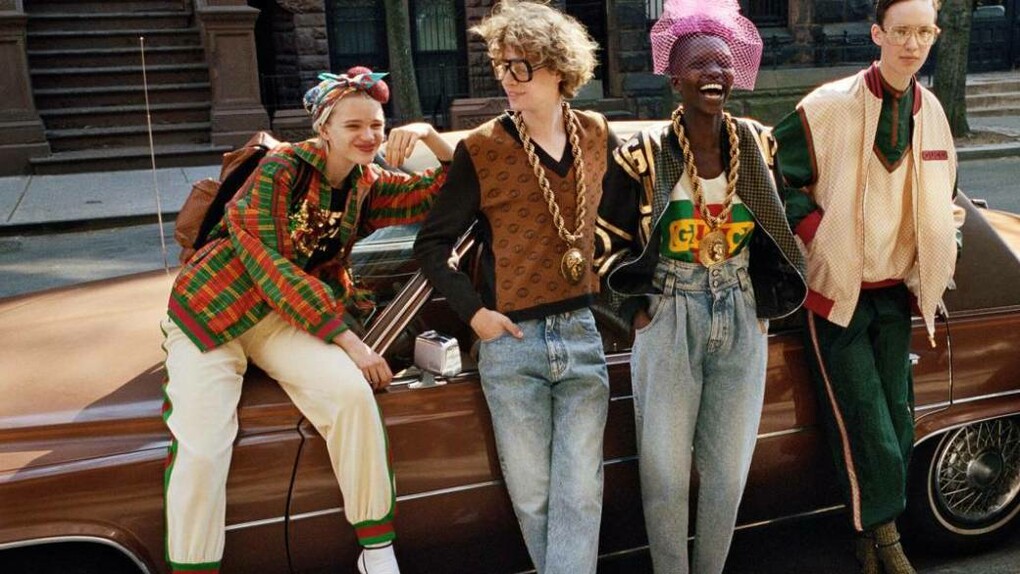

Filmmakers and video editors use color grading to evoke emotions and guide the viewer’s attention. For example, warm colors like red and orange can create excitement, while cool colors like blue and green evoke calmness. High-contrast visuals, such as dark backgrounds with bright text, enhance readability and comprehension.
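One way to put a number on "high contrast" is the contrast ratio defined in the Web Content Accessibility Guidelines (WCAG). The sketch below is an illustration under that assumption: WCAG is not mentioned in this post, the guideline was written for web content rather than video, and the function names are our own. It computes the ratio between a text color and a background color given as 8-bit sRGB values.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel (0-255) to linear light."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Relative luminance of an sRGB color, from 0.0 (black) to 1.0 (white)."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG-style contrast ratio, from 1.0 (identical) to 21.0 (black vs. white)."""
    l1, l2 = relative_luminance(fg), relative_luminance(bg)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


# Bright text on a dark background, as in the example above.
white, black = (255, 255, 255), (0, 0, 0)
print(f"white on black: {contrast_ratio(white, black):.1f}:1")
```

WCAG suggests a ratio of at least 4.5:1 for normal-size text, which can serve as a rough starting point when choosing caption or title colors.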

The Role of Audio in Video Processing

While visuals are essential, sound plays a significant role in how we interpret videos. The auditory cortex, located in the temporal lobe, works alongside the visual cortex to synchronize audio with motion. This synchronization, known as audio-visual integration, ensures that we perceive lip movements and speech as a single event rather than separate components.

This is why well-edited videos with precise audio cues feel more natural, whereas poorly synchronized audio can create a jarring experience. Sound effects and background music further enhance engagement by reinforcing emotions and guiding the viewer’s focus.

How Video Editing Enhances Brain Engagement

Role of Editing in Storytelling

Video editing is more than just cutting and arranging clips—it’s about shaping a narrative that keeps viewers engaged. Various editing techniques, such as jump cuts, slow motion, and montage sequences, manipulate the brain’s perception of time and motion.

For instance, jump cuts create a sense of urgency by skipping unnecessary moments, while slow motion allows viewers to focus on crucial details. Transitions, such as fades and wipes, help maintain continuity and prevent cognitive overload by ensuring smooth scene changes.

Use of Text-to-Voice in Video Creation

One of the latest trends in video editing is the use of text-to-voice AI tools. These tools allow creators to convert written scripts into natural-sounding voiceovers, eliminating the need for expensive recording equipment or voice actors. Spoken narration is often easier for viewers to follow than large blocks of on-screen text, making AI-generated voiceovers a valuable asset for explainer videos, online courses, and social media content.

Popular text-to-voice software includes CapCut, Murf AI, and ElevenLabs, each offering various voice styles and customization options. By using text-to-voice, creators can enhance accessibility and improve audience retention.

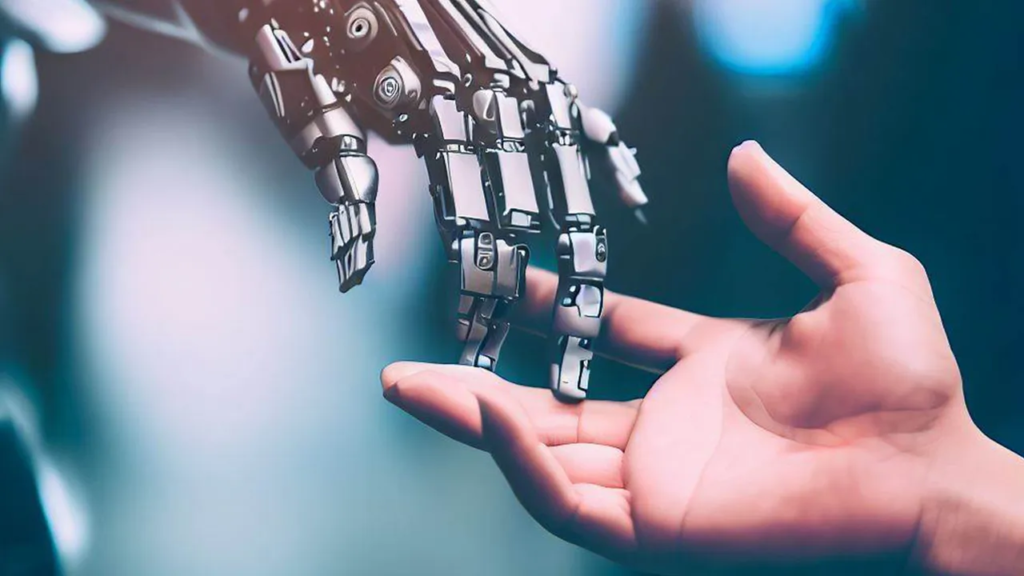

AI Video Generators: The Future of Content Creation

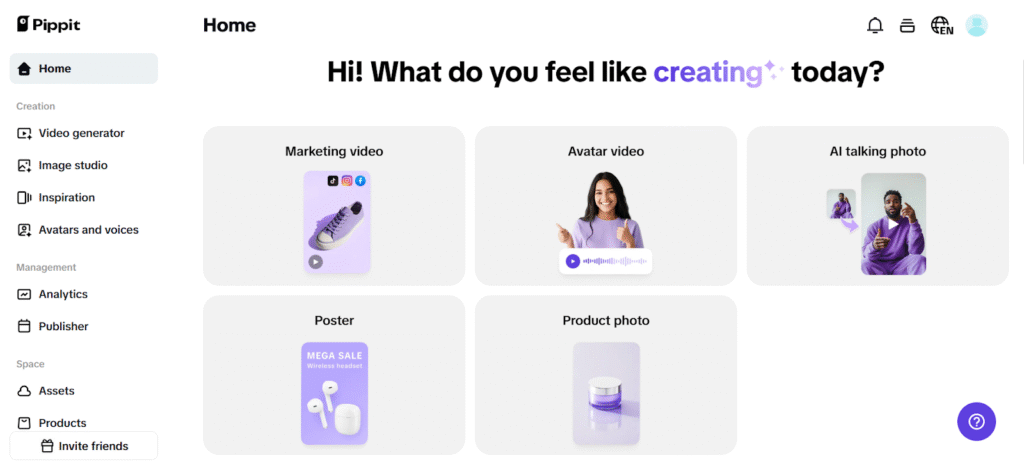

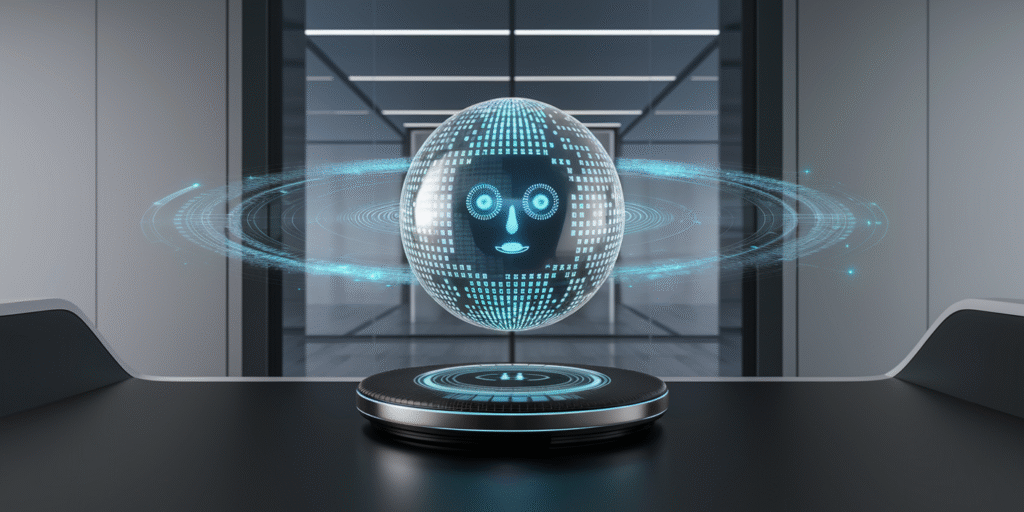

AI video generator tools use deep learning to transform text into fully animated videos. These tools analyze scripts and generate visuals, animations, and voiceovers automatically, significantly reducing production time.

AI-generated videos are particularly useful for businesses and educators who need to produce high-quality content quickly. The brain perceives AI-generated visuals similarly to traditional videos, provided they are well-designed and engaging. As AI continues to evolve, it will likely play a larger role in shaping the future of video content.

Step-by-Step Guide: Converting Text to Voice Using CapCut

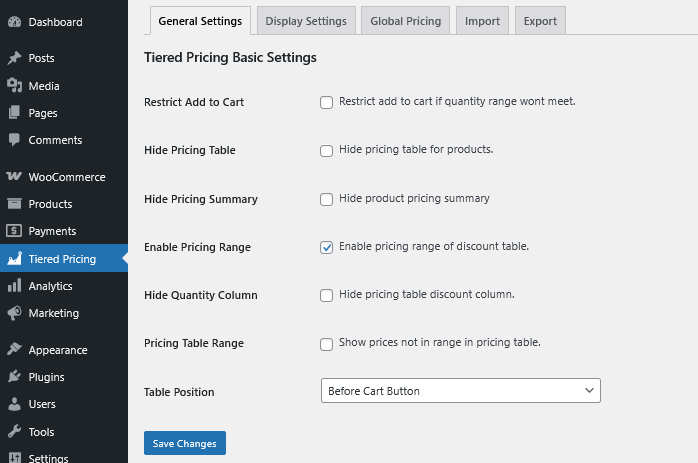

If you’re looking to enhance your videos with AI-generated voiceovers, the CapCut desktop video editor offers a simple and effective way to do it. Follow these steps to convert text into voice using CapCut’s desktop version:

Step 1: Import Video into CapCut

Open CapCut on your desktop and start a New Project. Then, click on Import and select your video file. Drag and drop the video onto the timeline.

Step 2: Convert Text to Voice

Click on the Text tab in the left-hand menu. Select Add Text and type or paste your script into the text box. Click on the Text-to-Speech option. Choose a voice style from the available options (e.g., male, female, robotic, or natural). Adjust settings such as pitch, speed, and volume to fit your video’s tone.

Click Generate Voice, and CapCut will process the text into an AI-generated voiceover.

Step 3: Export the Video

Preview the video to ensure synchronization and clarity. Click the Export button at the top-right corner. Choose your preferred resolution, frame rate, and file format. Click Export again, and your video will be saved with the AI-generated voiceover.
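Before generating a voiceover, it can help to estimate whether the script will fit the clip. The Python sketch below is a rough planning aid, not part of CapCut: it does not call any CapCut API, the function names are hypothetical, and the default of 150 words per minute is an assumed speaking rate that will differ from voice to voice and with the speed setting you choose.

```python
def estimate_narration_seconds(script: str, words_per_minute: float = 150.0) -> float:
    """Estimate how long a script takes to read aloud.

    words_per_minute is an assumed speaking rate, not a CapCut setting.
    """
    if words_per_minute <= 0:
        raise ValueError("words_per_minute must be positive")
    word_count = len(script.split())
    return word_count / words_per_minute * 60.0


def fits_clip(script: str, clip_seconds: float, words_per_minute: float = 150.0) -> bool:
    """Return True if the estimated narration is no longer than the clip."""
    return estimate_narration_seconds(script, words_per_minute) <= clip_seconds


script = "Open CapCut on your desktop and start a new project."
seconds = estimate_narration_seconds(script)
print(f"Estimated narration: {seconds:.1f} s; fits a 10 s clip: {fits_clip(script, 10)}")
```

If the estimate runs longer than the clip, you can trim the script, raise the speed setting, or extend the footage before exporting.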

Conclusion

The way the brain processes visual information in videos is a complex yet fascinating subject. From motion perception to color interpretation and audio synchronization, multiple cognitive processes work together to create a seamless viewing experience.

For video creators, leveraging editing techniques, AI tools, and text-to-voice technology can significantly enhance engagement and accessibility. As AI continues to reshape content creation, tools like CapCut’s text-to-voice feature and AI-generated video platforms are likely to become a standard part of the digital landscape.

Understanding these processes allows creators to craft more engaging, high-quality videos that captivate audiences and leave a lasting impact. Whether you’re a filmmaker, marketer, or educator, integrating AI-powered video tools will help you stay ahead in the ever-evolving world of video content creation.